How effective are insurers at sorting through the mounds of information they possess to make insightful decisions?

Since insurers are intrinsically in an information business, it stands to reason that data collection and analysis are of paramount importance. Historically, insurers have struggled to trust decisions based on analysis of data from their legacy systems because of deep concerns about the quality of the data. And, as carriers bring more, and more modern, software systems online, the data within them can accumulate at a mind-blowing, geometric rate.

An enormous pile of information can be very intimidating, but carriers can find effective and efficient ways to take advantage of the valuable secrets lurking within. Here are two key areas where specific practices and technologies can markedly increase an insurer's odds of data analysis success.

Capture

Systems must support data capture at appropriate levels of granularity, with tunable mechanisms, and in a suitably structured form. Granularity is a bit of an art form: systems must capture information at the right level of detail to optimize specific operational business processes as well as support desired analyses. Too much decomposition creates an unmanageable explosion of information (lots of heat, not enough light), but overgeneralized models allow important discriminators or decision drivers to hide below the surface.

Our experience is that carriers benefit from starting with models that are well-normalized and can act as an industry "best-practice baseline," but the data within each line of business, region, and channel usually deserves some refinement. This means that a viable software system must provide a comprehensive ability to tune the models in order to provide capture flexibility and enable an insurer to iteratively differentiate its offerings, pricing, and services.
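One way to picture a baseline model with per-segment refinement is as layered field definitions, where a refinement overrides or extends the baseline only where it needs to. The sketch below is purely illustrative: the field names, the `("homeowners", "FL")` segment, and the `capture_model` helper are all assumptions made for the example, not features of any particular core system.

```python
from collections import ChainMap

# Hypothetical baseline: fields captured for every policy, whatever the line.
BASELINE_FIELDS = {
    "insured_name": {"type": "text", "required": True},
    "effective_date": {"type": "date", "required": True},
    "construction_type": {"type": "text", "required": False},
}

# Hypothetical refinements, keyed by (line of business, region).
REFINEMENTS = {
    ("homeowners", "FL"): {
        # Tighten an existing field into a controlled vocabulary.
        "construction_type": {
            "type": "enum",
            "required": True,
            "choices": ["frame", "masonry", "concrete"],
        },
        # Add a discriminator that matters only for this segment.
        "roof_age_years": {"type": "integer", "required": True},
    },
}


def capture_model(line, region):
    """Return the effective field definitions for one segment.

    Refinements take precedence; anything not refined falls back to the baseline.
    """
    overrides = REFINEMENTS.get((line, region), {})
    return dict(ChainMap(overrides, BASELINE_FIELDS))
```

Under these assumptions, a segment with no refinements simply gets the baseline, so new lines of business can start from the shared model and diverge only as analysis shows the need.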

Finally, the data itself must be structured in a machine-interpretable way in order for software to do meaningful processing and to support downstream analysis. Systems that provide carefully elaborated models encourage insurer staff, producers, and, in today's era of self-service, customers to enter source data in higher-quality, structured ways, rather than as manual "free-form" content that is much harder to analyze later.
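As a rough illustration of the difference between structured and free-form capture, the sketch below turns raw form input into a typed record and rejects values that fall outside the model. The `LossNotice` record, the `CauseOfLoss` vocabulary, and the form keys are hypothetical, chosen only to make the idea concrete.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class CauseOfLoss(Enum):
    """Hypothetical controlled vocabulary replacing a free-text description."""
    FIRE = "fire"
    WATER = "water"
    WIND = "wind"
    THEFT = "theft"


@dataclass(frozen=True)
class LossNotice:
    """Hypothetical structured record for a first notice of loss."""
    policy_number: str
    loss_date: date
    cause: CauseOfLoss
    estimated_amount: float

    def __post_init__(self):
        # Reject values that parse correctly but make no business sense.
        if self.estimated_amount < 0:
            raise ValueError("estimated_amount must be non-negative")
        if self.loss_date > date.today():
            raise ValueError("loss_date cannot be in the future")


def parse_loss_notice(form):
    """Convert raw form strings into a typed record.

    Raises ValueError for a bad date, an unknown cause, or a non-numeric amount.
    """
    return LossNotice(
        policy_number=form["policy_number"].strip(),
        loss_date=date.fromisoformat(form["loss_date"]),
        cause=CauseOfLoss(form["cause"].lower()),
        estimated_amount=float(form["estimated_amount"]),
    )
```

A cause entered as "flooded basement" would be rejected at the point of entry here, which is the moment when the person who knows the answer is still available to pick the right category.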

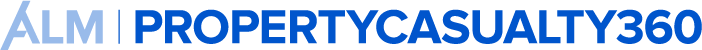

Moreover, systems that provide for more advanced forms of structural encoding, like geospatial content and synthetic data types, create opportunities for carriers to stretch their representational thinking and achieve even higher capture fidelity. This emphasis on quality data in provides the essential foundation for quality decision insights out.

Leverage

The data within core transactional systems is the lifeblood of an insurance carrier, but the laws of physics impose performance limits on the kinds of calculations and analysis that can be done in situ in operational data stores. Well-designed systems do provide a variety of in-line analysis and aggregated views into transactional data, but the most data-insightful carriers have grown entire ecosystems around their core systems, linking and feeding downstream analysis tools, frameworks, and repositories.

For example, carriers often choose to feed a traditional data warehouse, but some will go further and use a transport architecture that also feeds certain content to a NoSQL store or a faceted text search engine for real-time queries. The field is evolving so quickly that it is at least as important for an agile insurer to design for a dynamic set of data analysis components as it is to get any particular analytics path perfectly right. And for that even to be possible, of course, it is incumbent upon carrier core systems to be flexible, configurable enablers of this brave new data world.
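The transport idea can be sketched as a small fan-out: every event from the core system goes to the warehouse, while other destinations receive only the content they care about. Everything here is an assumption for illustration. The `Transport` class is an in-process stand-in for a real message bus or change feed, and the three in-memory "sinks" stand in for a warehouse loader, a NoSQL store, and a search index.

```python
from typing import Callable, Dict, List, Tuple

Event = Dict[str, object]
Sink = Callable[[Event], None]
Filter = Callable[[Event], bool]


class Transport:
    """Hypothetical in-process stand-in for a message bus or change-data feed."""

    def __init__(self) -> None:
        self._routes: List[Tuple[str, Sink, Filter]] = []

    def register(self, name: str, sink: Sink,
                 accepts: Filter = lambda event: True) -> None:
        """Add a downstream component; `accepts` selects the events it receives."""
        self._routes.append((name, sink, accepts))

    def publish(self, event: Event) -> List[str]:
        """Deliver one event to every matching sink; return the sink names used."""
        delivered = []
        for name, sink, accepts in self._routes:
            if accepts(event):
                sink(event)
                delivered.append(name)
        return delivered


# In-memory stand-ins for the downstream repositories (illustrative only).
warehouse_rows: List[Event] = []
document_store: Dict[object, Event] = {}
search_index: List[Tuple[object, str]] = []


def store_document(event: Event) -> None:
    document_store[event["id"]] = event


def index_notes(event: Event) -> None:
    search_index.append((event["id"], str(event["notes"])))


transport = Transport()
transport.register("warehouse", warehouse_rows.append)  # receives everything
transport.register("document_store", store_document,
                   accepts=lambda e: e.get("type") == "claim")
transport.register("search_index", index_notes,
                   accepts=lambda e: bool(e.get("notes")))
```

The point of the design is that adding or retiring an analysis component is a matter of registering or dropping a route, without touching the core system that publishes the events.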


© 2024 ALM Global, LLC, All Rights Reserved. Request academic re-use from www.copyright.com. All other uses, submit a request to [email protected]. For more information visit Asset & Logo Licensing.