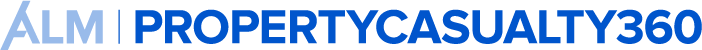

Employers are responsible for vetting potential bias in AI-based hiring tools. (Credit: Yeexin Richelle/Shutterstock)

The U.S. Equal Employment Opportunity Commission (EEOC) has released guidance on how employers' use of the many software and artificial intelligence (AI) tools that assess and hire candidates with little to no human interaction may violate existing requirements under Title I of the Americans with Disabilities Act (ADA). Employers are responsible for vetting potential bias in AI-based hiring tools, and the EEOC warns of three common ways in which these tools could violate the ADA:

- Failing to provide a reasonable accommodation necessary for an applicant to be evaluated fairly by an algorithm or AI-based tool.

- Using a decision-making tool that "screens out" an individual with a disability by preventing the applicant from meeting selection criteria due to a disability.

- Using a decision-making tool that incorporates disability-related inquiries or medical examinations.

The guidance also provides practical steps for reducing the chances that algorithmic decision-making will screen out an individual because of a disability, including:

- Informing applicants that reasonable accommodations are available.

- Providing alternative testing or evaluation if an applicant has previously scored poorly due to a disability.

- Providing applicants with information about the algorithmic hiring tools that are used, including the traits or characteristics measured and any disabilities that may negatively affect an applicant's result.

- Basing the traits and characteristics evaluated by AI on necessary job qualifications.

- Selecting algorithmic evaluation tools designed with accessibility for individuals with disabilities in mind.

Lauren Daming, a Greensfelder attorney and CIPP, says: "While the EEOC's new guidance is a big step toward helping employers evaluate their hiring practices for potential disability bias, it leaves many issues unaddressed. For example, the guidance recognizes that algorithmic decision-making tools may also negatively affect applicants due to other protected characteristics such as race or sex, but the guidance is limited to disability-related considerations alone."

© 2024 ALM Global, LLC, All Rights Reserved. Request academic re-use from www.copyright.com. All other uses, submit a request to [email protected]. For more information visit Asset & Logo Licensing.