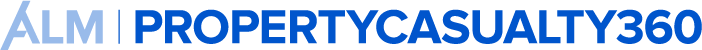

Theresa Benson, with InRule Technology. Courtesy photo


Artificial intelligence (AI) has changed how insurers price the risk associated with an account, in many ways for the better. But, says InRule Technology's Senior Product Marketing Manager Theresa Benson, the promise of automation has led to a new concern: bias in the AI algorithms and scoring models, which has led to some unexpected and unfair outcomes.

"I recently read about an alarming example of this. In some instances, risk calculations and algorithmic models resulted in a person with a driving under the influence (DUI) conviction on their driving record having a lower auto insurance premium than someone without one," notes Benson, pointing out that this was largely because the driver with the DUI had a better credit score. In another example, Benson says, pricing disparities in the marketplace can fall along gender lines. "In Oregon, for example, researchers found that women were charged $100 more than men for basic auto insurance – an 11.4% penalty."

This disconnect has led some state legislatures to act. Last year, Washington and Oregon sought to ban the use of credit-based insurance scoring to set premiums. They argued that insurers' credit-scoring models cause some groups of people to pay higher premiums even when they present less risk. More recently, Colorado introduced legislation requiring insurers to test their algorithms and scoring models for biases that had previously been ignored or blindly accepted.

"I certainly don't believe insurers are intentionally using bias to set insurance premiums," says Benson. She cites several factors that contribute to bias in insurance ratings, starting with the sheer volume of available data and the fact that the data itself can be inherently biased. "Finding causal data is key – it's important to get deeply aware of the relationship between data and outcomes. It's also important to note that bias can be introduced all along the path that data traverses, even before it makes it into models that are used in decisioning, machine learning models or calculations. It's challenging for enterprises to examine their infinite amount of data for ways it may introduce bias, and in many cases, they don't have the tools to track and mitigate potential bias, either."

AI, in the form of declarative and predictive logic, also sits at the center of the issue. It has been a boon to insurers, who use it to automate parts of underwriting, claims processing and fraud detection. With this type of AI, data goes in, predictions are made and consumed by automated business logic, and decisions come out. But the processes between input and output aren't always transparent. Complicating matters, machine learning models trained on potentially biased data can unintentionally absorb data-based biases, human biases or historical situational ignorance, Benson points out. So, if all an insurer bases its ratings on is a prediction and a confidence level generated by these "black box" machine learning algorithms, how can it be sure that a protected characteristic, or a proxy for one, wasn't used in a harmful way to arrive at an outcome?

Benson says the only way to mitigate insurance ratings bias is with a decision platform like InRule that provides transparency across the AI technologies in the value chain. "InRule can capture every factor influencing and the outcomes of decision-making that runs on our platform," says Benson. "But we don't leave it at that: our machine learning technology also captures and presents all the data that contributed to a prediction, so that insurers can identify when or if bias was part of the decision outcome and adjust their logic to respond accordingly." The platform can also output this information for later retrieval and examination, if needed or if required by regulators.

As insurers increasingly turn to AI to automate even more insurance processes, they will need to pay closer attention to the potential for insurance ratings bias in machine-learning models. Eliminating bias in our country is a step forward that's long overdue, and the insurance industry can do its part by using technology the right way.

© 2024 ALM Global, LLC, All Rights Reserved.